Demand for AI application development services in the USA is exploding. By one industry estimate, the USA AI market hit USD 638.23 billion in 2024, with projections pointing to sustained strong growth. Yet success is rare: by some estimates, 80% or more of AI projects fail or never reach production. For generative AI, one widely cited figure is that 95% of implementations produce no measurable impact on business P&L.

Why do so many efforts collapse? Is it poor data? Weak integration? Scope creep? Or gaps in deployment strategy?

You don’t have to be another statistic. With structured AI application development services, you get a clear path: define real use cases, build testable prototypes, integrate systems reliably, deploy models at scale, and maintain them over time. Along the way, you tap AI software development services for supporting modules like dashboards, APIs, and data pipelines.

This blog walks you through how USA providers deliver AI application development services, what tech they use, how pricing works, and how to pick a vendor you can trust.

The Typical Process Flow of AI Application Development Services in the USA

Every provider structures the AI app lifecycle differently, but most executions follow a predictable progression. Understanding this flow helps you evaluate proposals and spot missing pieces before signing a contract.

Step 1. Discovery, Use-Case Definition and Feasibility Study

The first checkpoint is clarifying why the AI feature should exist. Providers assess cost savings, productivity uplift, or potential revenue based on your process data. A feasibility scan follows to confirm whether usable datasets, domain permissions, or compliance approvals are already in place. Depending on available inputs, the scope is trimmed into a pilot, MVP, or full rollout.

Step 2. Design, Architecture, and Prototyping

Once the case is validated, teams create interaction flows for chat, recommendation, or visual features. This step avoids confusion later by separating the model layer, API layer, and user interface. Instead of jumping into full-scale development, a small prototype or PoC validates performance expectations.

Step 3. Data Engineering and Model Development

Most delays happen here. Data is collected, cleaned, labeled, and converted into structured formats that models can consume. Engineers perform feature engineering or use pre-built embeddings when applicable. Models are trained or fine-tuned with proper version control, experiment logs, and validation reports.

Step 4. Integration and Full App Development

After the model behaves well in isolation, it is linked with the frontend or backend stack. Authentication, governance, exception handling, and fallback logic are introduced to make the AI feature safe for users. Teams also choose between real-time or batch inference based on latency expectations and cloud budgets.
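To make the fallback idea concrete, here is a minimal Python sketch, assuming the model is exposed as a plain callable. The names `predict_with_fallback`, `echo_model`, and `flaky_model` are hypothetical and stand in for whatever inference client a provider actually uses.

```python
from typing import Callable, Tuple


def predict_with_fallback(
    model_call: Callable[[str], str],
    text: str,
    fallback: str = "Sorry, I can't answer that right now.",
    max_attempts: int = 2,
) -> Tuple[str, bool]:
    """Return (response, used_fallback) so callers can log fallback rates."""
    for _ in range(max_attempts):
        try:
            response = model_call(text)
        except Exception:
            continue  # treat any error as transient and retry
        if response and response.strip():
            return response, False  # usable model output
    # Every attempt failed or returned empty output: serve the safe default.
    return fallback, True


# Hypothetical stand-ins for a real inference endpoint.
def echo_model(text: str) -> str:
    return f"echo: {text}"


def flaky_model(text: str) -> str:
    raise TimeoutError("inference endpoint timed out")


ok_result = predict_with_fallback(echo_model, "hi")
failed_result = predict_with_fallback(flaky_model, "hi")
print(ok_result, failed_result)
```

Returning the `used_fallback` flag alongside the response is a design choice in this sketch: it lets the app track how often users see the default answer, which is a useful signal once monitoring starts.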

Step 5. Testing, Validation and QA

This is where confidence is earned. Model predictions are stress-tested against edge cases. Performance benchmarks include fairness scores, uptime stability, and integration resilience. Human review loops and A/B experiments help measure real-world behavior before scaling.
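One simple way to stress-test against edge cases is to score the model separately on each data slice and look at the gap. The sketch below assumes evaluation records arrive as `(group, y_true, y_pred)` tuples; the group names and sample data are made up for illustration, and real fairness audits typically use richer metrics than plain accuracy.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


# Illustrative data only: the model does well on typical inputs
# and worse on a hand-picked edge-case slice.
records = [
    ("typical", 1, 1), ("typical", 0, 0), ("typical", 1, 1), ("typical", 0, 0),
    ("edge_case", 1, 0), ("edge_case", 0, 0), ("edge_case", 1, 1), ("edge_case", 1, 0),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)
```

A large gap between slices is a cue to collect more data or add human review for the weaker slice before scaling.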

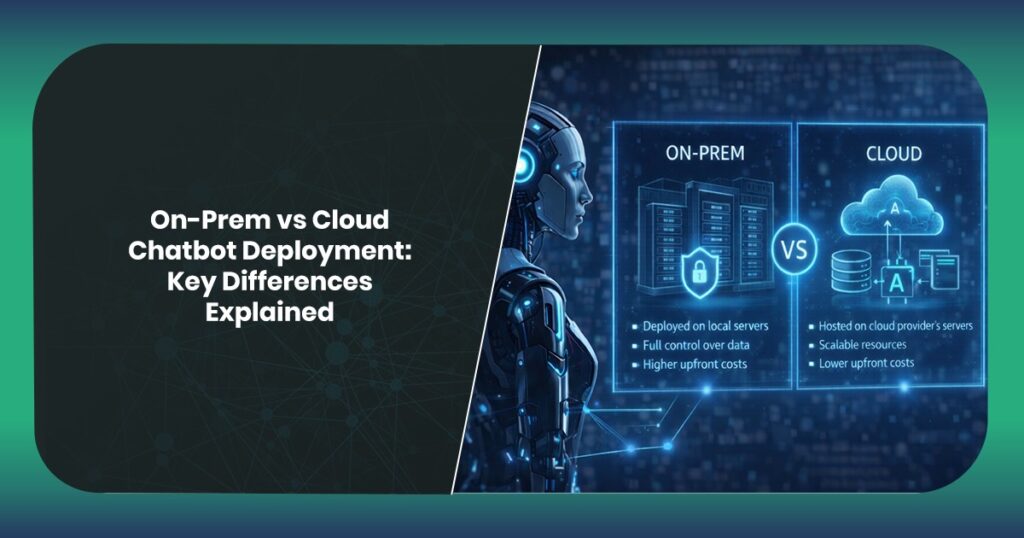

Step 6. Deployment, Monitoring and Maintenance

The final step pushes models into cloud or hybrid infrastructure. Monitoring begins immediately to catch model drift, latency spikes, or error surges. Maintenance tasks include retraining cycles, rollback plans, and version patching based on live feedback.
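Drift monitoring can be as simple as comparing the distribution of a feature at training time with what the live system sees. The sketch below uses the Population Stability Index (PSI), one common drift statistic. The function name, the bin count, and the 0.2 alert threshold are assumptions for illustration; teams usually tune these per feature.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data: live values have shifted toward the upper half of the range.
baseline = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]

drift_score = psi(baseline, live)
ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, not a universal standard
print(drift_score, drift_score > ALERT_THRESHOLD)
```

In practice a check like this would run on a schedule and trigger the retraining or rollback plans mentioned above when the score crosses the chosen threshold.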

Key Technologies and Tools Powering USA’s AI Application Development Services

Most providers differentiate themselves through tooling more than talent. Understanding the stack gives you a clear view of what level of sophistication to expect and how maintainable your system will be post-launch.

1. Natural Language Processing (NLP) Frameworks

Modern text analysis relies on NLP frameworks that perform tokenization, entity extraction, summarization, and classification at scale. These systems convert raw text into structured insights by breaking down syntax and semantics. Commonly used NLP frameworks include:

- spaCy: Fast entity recognition, dependency parsing, and part-of-speech tagging for production-grade NLP pipelines.

- NLTK: Used in research and prototyping for linguistic processing, stemming, and corpus-based analysis.

- Stanford CoreNLP: Provides deep linguistic parsing, sentiment scoring, and co-reference resolution via Java APIs.

- OpenAI GPT Models (via API): Handle summarization, classification, and multi-language processing when paired with prompt logic.

2. Machine Learning & Classification Platforms

Once text is parsed, machine learning models categorize intent, predict sentiment, or detect spam, risk, and anomalies. These systems rely on supervised or zero-shot classification. Commonly used ML and classification platforms include:

- Scikit-learn: Traditional ML-based classifiers (SVMs, Naive Bayes) for fast training on labeled datasets.

- Hugging Face Transformers: Pretrained models (BERT, RoBERTa, DistilBERT) for high-accuracy text classification.

- TensorFlow / PyTorch: Fully flexible frameworks for training custom models and fine-tuning deep learning architectures.

- Amazon Comprehend / Google Cloud Natural Language API: Cloud-based classification without infrastructure setup.
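To show what a traditional supervised classifier does under the hood, here is a tiny multinomial Naive Bayes written in plain Python. It is a teaching sketch, not how any of the libraries above implement it; the function names and the four training sentences are invented, and a real project would use a library such as Scikit-learn with far more labeled data.

```python
import math
from collections import Counter, defaultdict


def train_nb(samples):
    """samples: list of (text, label). Returns counts needed for prediction."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in samples:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, float("-inf")
    for label, count in class_counts.items():
        score = math.log(count / total_docs)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are skipped
                # Laplace (add-one) smoothing keeps unseen pairs from zeroing the score.
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Invented training data for illustration.
training = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting moved to friday", "ham"),
    ("please review the attached report", "ham"),
]
model = train_nb(training)
print(predict_nb(model, "free prize inside"))
print(predict_nb(model, "review the friday report"))
```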

3. Text Mining & Search Indexing Engines

At scale, unstructured text needs to be converted into retrievable formats using techniques like inverted indexing, TF-IDF scoring, BM25 ranking, and vector-based semantic search. Traditional keyword search engines rely on tokenization and frequency analysis, while newer systems store dense embeddings to detect meaning even when exact keywords don’t match. These engines act as the backbone for real-time querying, document retrieval, and question-answering pipelines across support logs, compliance archives, and knowledge bases.

Commonly used text mining and search indexing engines include:

- Elasticsearch: Full-text search, keyword clustering, and real-time filtering with scalable inverted indexing.

- Apache Solr: Query-based document retrieval with faceted navigation and BM25-based scoring.

- Vectara / Pinecone / Weaviate: Vector indexing for semantic search using dense embeddings from transformer models.

- LangChain + Vector Stores: Retrieval-augmented pipelines that let LLMs pull grounded facts from document databases instead of hallucinating.
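For a feel of how keyword ranking works, the sketch below scores a few documents against a query with BM25 in plain Python. It uses whitespace tokenization and the smoothed IDF variant popularized by Lucene; the parameter defaults `k1=1.5` and `b=0.75` and the sample documents are assumptions for illustration, and production engines add analyzers, inverted indexes, and much more.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query."""
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates via k1; b controls length normalization.
            norm = tf[term] * (k1 + 1) / (tf[term] + k1 * (1 - b + b * len(d) / avgdl))
            score += idf * norm
        scores.append(score)
    return scores


# Illustrative mini knowledge base.
docs = [
    "refund policy for annual subscriptions",
    "how to reset your account password",
    "password reset link expired after one hour",
]
scores = bm25_scores("reset password", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(docs[best], scores)
```

Both password documents match the query, but the shorter one ranks higher because BM25 normalizes by document length. A vector-based engine would instead compare dense embeddings, which is what lets it match meaning when the exact keywords differ.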

4. Annotation & Labeling Platforms

Before models can learn, raw data must be structured into machine-readable formats through manual or semi-automated labeling. Modern platforms support multi-class classification, entity tagging, bounding box selection, and relationship mapping, often incorporating active learning loops where models suggest probable labels to reduce human effort. Advanced systems also enforce inter-annotator agreement scoring, consensus validation, and audit trails to maintain dataset reliability at scale.

Popular annotation platforms include:

- Labelbox: Workflow-based labeling with annotator assignment, consensus scoring, and QA checkpoints.

- Prodigy: Active learning with assisted labeling that auto-suggests tags for faster acceptance/rejection.

- Amazon SageMaker Ground Truth: Managed workforce plus rule-based automation for repetitive annotations.

- Doccano: Open-source interface for entity recognition, text classification, and relation tagging.
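Inter-annotator agreement is often summarized with Cohen's kappa, which corrects raw agreement for the agreement two labelers would reach by chance. The sketch below is a plain-Python version for two annotators; the function name and the sample labels are illustrative, and it does not reflect how any specific platform above computes its scores.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items where both chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Illustrative labels: the annotators agree on 6 of 8 items.
annotator_1 = ["spam", "ham", "spam", "ham", "spam", "ham", "ham", "spam"]
annotator_2 = ["spam", "ham", "ham", "ham", "spam", "ham", "spam", "spam"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

Here raw agreement is 75%, but because half of that would be expected by chance, kappa comes out at 0.5. Teams often set a minimum kappa before a labeled batch is accepted into the training set.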

5. Sentiment & Emotion Analytics Engines

Basic polarity scoring is no longer enough. Modern systems add fine-grained emotion tagging, transformer-based tone detection, and context-aware sarcasm handling. These engines analyze word embeddings, punctuation patterns, and metadata signals to classify urgency, frustration, enthusiasm, or compliance risk in conversations. Some also provide aspect-based sentiment, isolating opinions tied to specific product features or service touchpoints.

Common tools for sentiment and emotion analysis include:

- Lexalytics: Granular sentiment, intent, and aspect scoring across structured and unstructured text.

- Brandwatch / Sprinklr: Social listening dashboards with emotion segmentation and volume trends.

- MonkeyLearn: No-code sentiment classifiers for customer support, reviews, and inbound tickets.

- IBM Watson NLU: Pre-trained emotion recognition for states like anger, joy, fear, or sadness.

Cost Models & Pricing for AI Application Development Services in the USA

Building with AI application development services in the USA is no longer reserved for Fortune 500 budgets, but cost expectations still vary depending on use case and deployment scale.

Buyers often struggle to distinguish between prototype pricing and production-grade investment, which leads to underbudgeting midway through delivery.

To prevent that, it helps to break the economics into four layers: baseline build ranges, core drivers, pricing structures, and long-term operational spend.

Price Ranges & Benchmarks

Pricing for AI application development services in the USA varies based on scope and model complexity, but current market studies show consistent patterns. The table below lists typical price ranges by project type:

| Project Type | Typical Cost (USD) | What It Includes |
| --- | --- | --- |
| Proof of Concept (PoC) | $10,000-$50,000 | Typically covers a limited dataset, basic integration, and a lightweight interface. |
| Production-Ready Build | $50,000-$250,000 | Includes APIs, user roles, dashboards, cloud hosting, and monitoring. |
| Enterprise-Grade Platforms | $150,000-$1M+ | Costs increase sharply once legal compliance, multimodal features, or proprietary model licensing are involved. |

Businesses building regulated or domain-specific products (finance, healthcare, legal) should expect higher spend due to validation cycles and audit constraints.

What are the Major Cost Drivers?

The price of AI application development services in the USA isn’t dictated by coding effort alone. Most of the spend comes from the underlying data strategy, model selection, and infrastructure footprint. Key elements that typically push budgets up or down include:

- Model Architecture & Multimodality: Transformer-based or multi-input systems (text + image + speech) require higher compute and longer optimization cycles.

- Data Labeling Volume & Quality Prep: Cleaning, annotating, and validating raw records, especially in medical or legal datasets, can consume 30-40% of total spend.

- GPU Infrastructure & Inference Hosting: Training large models or hosting real-time inference routes leads to recurring cloud charges based on GPU hours.

- Third-Party Model Licensing & API Usage: Platforms like OpenAI or Anthropic add variable, volume-based costs that scale with user traffic.

- Compliance & Audit Requirements: Industries under HIPAA, SOC 2, or GDPR need audit logs, encryption layers, and access controls, all of which require senior engineering time.

- Specialized Talent Involvement: Data scientists, ML engineers, and MLOps specialists typically command higher hourly rates than full-stack developers.

What are Hidden & Ongoing Costs?

Many project budgets assume spending stops after launch, but AI application development services in the USA carry recurring operational expenses that quietly accumulate over time. To avoid budget shocks, teams should factor in:

- Cloud Inference Consumption: Model usage bills scale with user traffic, especially for LLM-based chat or recommendation features.

- Retraining & Drift Correction: Models degrade as user behavior shifts. Regular fine-tuning or full retraining is required to maintain accuracy.

- API & Dataset Subscriptions: External services (speech-to-text, embeddings, news feeds, identity checks) come with monthly or per-call fees.

- Feature Expansion Requests: Once users adopt the system, new intents, entities, or workflows often get added, increasing engineering hours.

- Data Pipeline Maintenance: When input sources change format, ingestion scripts break, requiring quick patching to avoid outages.

- Explainability & Fairness Reports: Regulated sectors may request bias audits or interpretability reports at scheduled intervals.
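A quick back-of-the-envelope calculation shows how inference bills scale with traffic. The sketch below assumes per-token API pricing; every number in it (request volume, token counts, and prices per 1,000 tokens) is a hypothetical placeholder, so substitute your provider's actual rates.

```python
def monthly_inference_cost(requests_per_day, tokens_in, tokens_out,
                           price_in_per_1k, price_out_per_1k, days=30):
    """Estimate monthly spend for a token-priced model API."""
    per_request = (tokens_in / 1000) * price_in_per_1k \
        + (tokens_out / 1000) * price_out_per_1k
    return requests_per_day * days * per_request


# Hypothetical workload and prices, for illustration only.
cost = monthly_inference_cost(
    requests_per_day=10_000,
    tokens_in=500,            # average prompt size (assumed)
    tokens_out=250,           # average response size (assumed)
    price_in_per_1k=0.001,    # assumed USD per 1,000 input tokens
    price_out_per_1k=0.002,   # assumed USD per 1,000 output tokens
)
cost_at_10x = monthly_inference_cost(100_000, 500, 250, 0.001, 0.002)
print(f"${cost:,.2f} per month, ${cost_at_10x:,.2f} at 10x traffic")
```

Because the cost is linear in traffic, a feature that is cheap during a pilot can become a major line item after a successful rollout, which is why it belongs in the budget from day one.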

Pricing Models Used by Service Providers

Most vendors offering AI application development services in the USA use one of three commercial models:

- Time and Materials (T&M): Flexible but unpredictable for clients with unclear scope.

- Fixed-Bid Milestones: Safer for well-defined builds.

- Hybrid Contracts: Increasingly common. The first phase, covering research and solution design, is fixed-price; once the scope stabilizes, ongoing training and iteration move to T&M.

How to Evaluate & Select a USA AI Application Development Vendor?

Choosing a provider is often harder than building the product. Many firms advertise AI application development services in the USA, but only a fraction can deliver production-grade systems that scale under real usage. A structured evaluation process helps filter presentation-heavy vendors from outcome-driven teams.

1. Domain Experience & Case Studies

Start by checking if the vendor has shipped solutions in your vertical, such as healthcare, finance, logistics, retail, or public services. Ask for sanitized demos that show before-and-after impact. Track measurable results such as response latency, precision/recall lift, or end-user activation rates. A vendor that shares failure lessons is more reliable than one offering only success slides.

2. Technical Depth & Team Composition

A complete delivery squad should include data engineers, ML developers, an MLOps specialist, a frontend/backend pair, a product manager, and a compliance lead. Clarify if they fine-tune models internally or depend entirely on external APIs. Teams built around one freelancer or outsourced contractor chains rarely manage full lifecycle ownership.

3. Process Transparency & Communication

The provider should give visibility into sprints, release calendars, and backlog items. Regular demos, weekly or bi-weekly, keep stakeholders involved without surprises. Confirm how feedback is logged, approved, and merged into future iterations. A working channel (Slack, Teams, Jira) should stay open for quick escalation.

4. Risk Mitigation & Contracts

Contracts must specify IP transfer, uptime guarantees, rollback options, and support coverage. Source code should be escrowed or shared via private repo access. Align compliance clauses with your internal security standards before signing.

5. Scalability & Future Growth

Systems should be modular enough to add new features without extensive refactors. Validate if the architecture supports GPU scaling, database expansion, or swapping base models later. Ask if the team has successfully upgraded apps from classifiers to agent-style workflows.

FAQs

Q1: How long does it take to build an AI application in the USA?

Most builds fall between 3-6 months for a basic release. Multi-feature enterprise apps with integrations or compliance reviews can stretch to 9-18 months.

Q2: Can I start with a smaller PoC before committing to full AI application development services in the USA?

Yes. Most providers structure projects in phases so you can validate outputs early and expand later without rework.

Q3: Do USA vendors allow offshore or hybrid engineering teams?

Many do. Core architects often sit in the U.S. while data labeling or module builds are handled offshore to manage cost.

Q4: What security standards should be expected in production-ready AI software?

HIPAA, SOC 2, and CCPA compliance are common requirements. Encryption, access logs, and audit records should be enforced by default.

Q5: How can I control cloud costs once the AI model goes live?

Use autoscaling, batched inference, pruning, and usage-based pricing. Smaller distilled models are often cheaper to host with little loss in accuracy.

Q6: What’s the difference between AI software development services and AI application development services?

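AI software development services may refer only to individual components, such as model builds or supporting modules like dashboards, APIs, and data pipelines. AI application development services in the USA cover the full stack: interface, deployment, monitoring, and long-term support.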